Artificial Intelligence and Cybersecurity: Opportunities and Risks

In today’s internet-dominated world, AI is pervasive, so it is unsurprising that it is reshaping cybersecurity, as recent market research indicates.

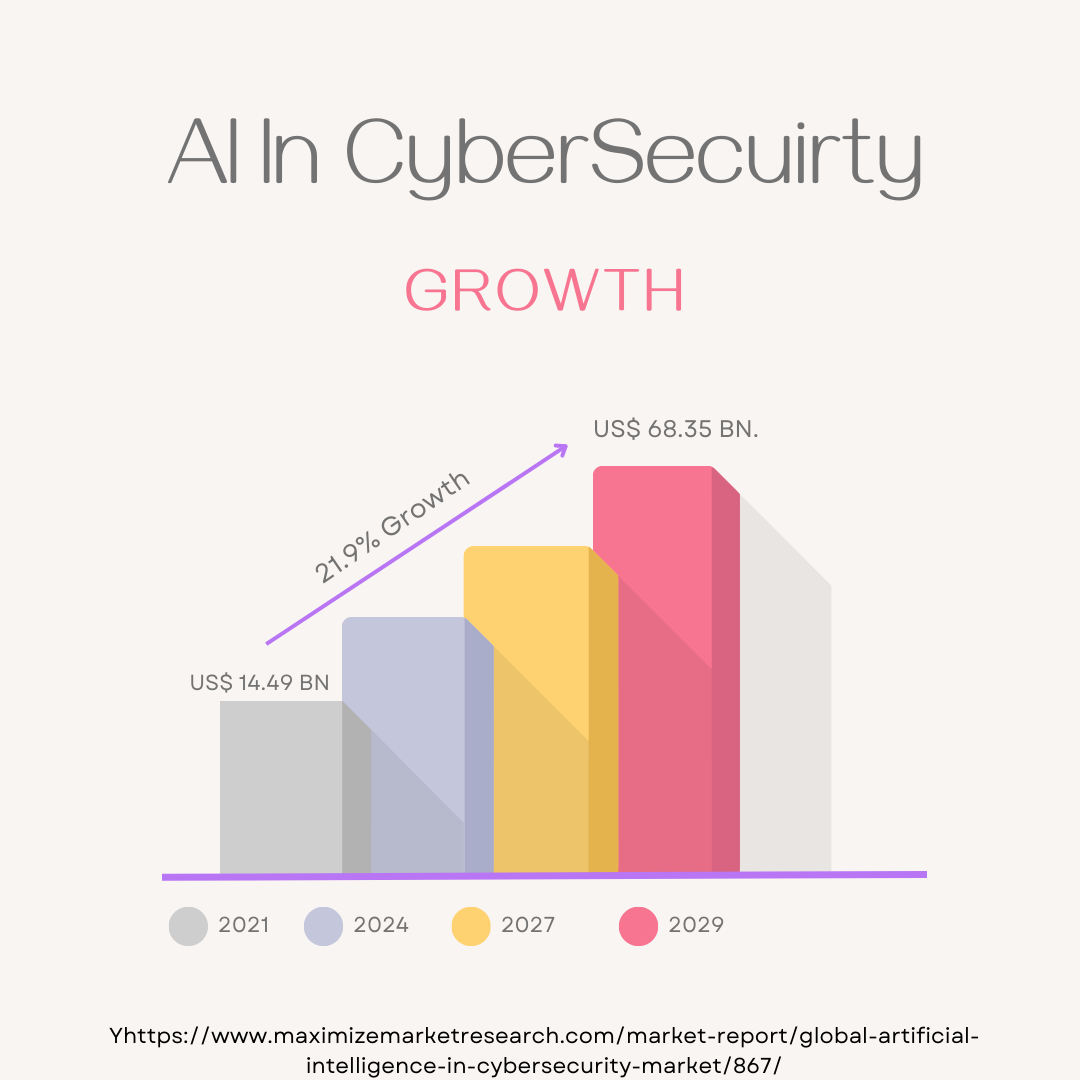

The market for artificial intelligence in cybersecurity was estimated at US$ 14.49 billion in 2021 and is projected to grow at a compound annual growth rate (CAGR) of 21.4% from 2022 to 2029, reaching roughly US$ 68.35 billion.
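Read as a compound annual growth rate, the 21.4% figure is consistent with the two market values: compounding US$ 14.49 billion once per year for eight years (2022 through 2029) lands at roughly US$ 68.35 billion. The quick Python check below assumes 2021 is the base year and one compounding step per year; the function names are illustrative.

```python
# Sanity check: does 21.4% compound annual growth turn US$14.49B (2021)
# into roughly US$68.35B by 2029? Assumes one compounding step per year.
BASE_2021 = 14.49     # US$ billions
CAGR = 0.214          # 21.4% per year
YEARS = 2029 - 2021   # 8 compounding periods (2022..2029)


def project(base: float, rate: float, years: int) -> float:
    """Value after compounding `base` at `rate` for `years` periods."""
    return base * (1 + rate) ** years


def implied_cagr(start: float, end: float, years: int) -> float:
    """Annual growth rate that turns `start` into `end` over `years` periods."""
    return (end / start) ** (1 / years) - 1


projected = project(BASE_2021, CAGR, YEARS)
rate = implied_cagr(BASE_2021, 68.35, YEARS)
print(f"Projected 2029 market: US${projected:.2f}B")  # US$68.36B
print(f"Implied CAGR: {rate:.2%}")                      # 21.40%
```

The small gap between 68.36 and the reported 68.35 is just rounding in the published figures.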

How Artificial Intelligence is Revolutionizing the Cybersecurity Market

For businesses that want to prosper online, artificial intelligence is becoming an essential cybersecurity tool. To operate effectively and defend their organizations from cyberattacks, security professionals increasingly need cutting-edge technology such as AI. It can raise malware alerts, recognize novel threats, and help safeguard crucial company data. AI adoption is most advanced in the information technology and telecommunications industries.

A recent global assessment of over 4,500 technology decision-makers from various industries found that 29% of SMEs and 45% of large corporations had adopted AI. AI is already gaining traction in cybersecurity for protecting data, because it can analyze millions of records quickly and detect a wide variety of cyberattacks. As a result, AI use in the cybersecurity industry is expected to keep rising steadily over the forecast period.

Judging by these statistics, with projected annual growth of 21.4% through 2029, AI is set to become an important part of the toolkit for security researchers and cybersecurity organizations alike.

Impact of AI in CyberSecurity: Promises and Perils

Judging by these market statistics, AI presents both opportunities and plenty of risks for people and organizations in cybersecurity. To illustrate, we will look at one example: the recently launched ChatGPT, which has taken the world by storm.

Artificial intelligence is developing in previously unheard-of ways to address fascinating new issues. But do the benefits outweigh the potential hazards and concerns as AI is used in crucial cybersecurity operations?

“The problem people don’t realize is that ChatGPT, being a new, shiny object, it’s all the craze that’s about. But the problem is that most of the content that’s produced either by ChatGPT or others are assets with no warranties, accountability or whatsoever. If it is content, it’s OK. But if it is something like code that you use, then it’s mostly not.”

Andy Thurai

Vice President and Principal Analyst, Constellation Research Inc.

As Andy highlights, AI can have both positive and negative effects on cybersecurity; it is up to people how they choose to use it. Many companies have shifted to AI and ML models to improve their security, while some hackers have used AI as bait, for example through fake OpenAI extensions or apps designed to steal people’s data or sometimes their money.


Opportunities of AI in CyberSecurity

To begin with, notable advancements in AI have significantly strengthened security measures. Attendees of RSA Conference 2023 (RSAC 2023) saw numerous companies introduce innovative AI and ML solutions, including HiddenLayer, winner of the conference’s Innovation Sandbox 2023 contest. This standout tool focused on threat intelligence, harnessing a combination of AI and machine learning algorithms. Such developments highlight the positive prospects AI brings to companies’ cybersecurity.

Let’s explore several cybersecurity categories where AI is making remarkable advancements.

Threat Hunting

Traditional security methods use signatures or indicators of compromise to identify threats, which works well for known threats but falls short for threats that have not yet been identified. Signature-based approaches detect about 90% of threats. Replacing them with AI can raise detection rates to about 95%, but at the cost of many more false positives. Combining traditional approaches with AI can reportedly approach a 100% detection rate while keeping false positives to a minimum. Incorporating AI-driven behavioral analysis can further strengthen businesses’ threat-hunting procedures and improve their overall security posture.
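To illustrate how behavioral analysis can be layered on top of signatures, here is a minimal Python sketch. The hash list, behavior names, weights, and threshold are all hypothetical placeholders; a real system would learn behavioral scores from data rather than hard-code them.

```python
import hashlib

# Hypothetical signature database: SHA-256 hashes of known-malicious files.
KNOWN_BAD_HASHES = {
    hashlib.sha256(b"known-malware-sample").hexdigest(),
}

# Hypothetical behavioral indicators and weights (illustrative only).
BEHAVIOR_WEIGHTS = {
    "disables_antivirus": 0.5,
    "mass_file_encryption": 0.6,
    "writes_startup_entry": 0.2,
    "contacts_rare_domain": 0.3,
}
BEHAVIOR_THRESHOLD = 0.7


def signature_match(file_bytes: bytes) -> bool:
    """Classic signature check: exact hash lookup, catches only known threats."""
    return hashlib.sha256(file_bytes).hexdigest() in KNOWN_BAD_HASHES


def behavior_score(observed: set[str]) -> float:
    """Sum the weights of observed suspicious behaviors, capped at 1.0."""
    return min(1.0, sum(BEHAVIOR_WEIGHTS.get(b, 0.0) for b in observed))


def verdict(file_bytes: bytes, observed: set[str]) -> str:
    """Signature first (cheap, precise), then behavior for unknown samples."""
    if signature_match(file_bytes):
        return "malicious (signature)"
    if behavior_score(observed) >= BEHAVIOR_THRESHOLD:
        return "suspicious (behavior)"
    return "clean"


print(verdict(b"known-malware-sample", set()))  # malicious (signature)
print(verdict(b"brand-new-variant",
              {"disables_antivirus", "mass_file_encryption"}))  # suspicious (behavior)
print(verdict(b"text-editor", {"writes_startup_entry"}))  # clean
```

The known sample is caught by its hash, while the never-seen variant is caught only because of what it does. Requiring several weak behaviors before alerting, rather than one, is a simple way to keep false positives down.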

Vulnerability Management

There were 20,362 new vulnerabilities disclosed in 2019, a 17.8% increase over 2018. Faced with this surge, organizations struggle to prioritize and manage vulnerabilities effectively, and traditional methods often overlook high-risk vulnerabilities until they are already being exploited. AI and machine learning methods such as User and Entity Behavior Analytics (UEBA) can learn what normal behavior looks like and spot out-of-the-ordinary activity, which may signal an unknown zero-day attack. This proactive approach can protect organizations even before vulnerabilities are formally reported and patched.
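UEBA tools build a baseline of normal activity for each user or entity and flag large deviations from it. The Python sketch below reduces that idea to a per-user z-score on a single metric (megabytes downloaded per day); the metric, the data, and the 3-sigma threshold are illustrative assumptions, and real UEBA products model many signals at once.

```python
from statistics import mean, stdev

# Hypothetical history: megabytes downloaded per day, per user.
history = {
    "alice": [120, 135, 110, 128, 140, 125, 118],
    "bob": [40, 55, 38, 47, 50, 44, 52],
}

Z_THRESHOLD = 3.0  # flag activity more than 3 standard deviations from normal


def baseline(samples: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a user's past activity."""
    return mean(samples), stdev(samples)


def is_anomalous(user: str, today: float) -> bool:
    """Compare today's value against this user's own baseline."""
    mu, sigma = baseline(history[user])
    if sigma == 0:
        return today != mu  # flat history: any change is unusual
    return abs(today - mu) / sigma > Z_THRESHOLD


print(is_anomalous("alice", 132))  # False: typical for alice
print(is_anomalous("bob", 132))    # True: far outside bob's baseline
```

Because the baseline is per user, the same 132 MB download is routine for one account and a red flag for another. That context does not depend on any known signature, which is why this style of analysis can surface previously unseen attacks.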

Network Security

Two time-consuming aspects of traditional network security are developing security policies and understanding the organization’s network topology. Security policies identify legitimate network connections and provide a means to closely scrutinize those that may show signs of malicious activity. These policies are essential for implementing a zero-trust model effectively. However, because organizations operate so many networks, defining and maintaining such policies is a significant challenge.

Moreover, when it comes to network topology, most businesses lack standardized naming conventions for their applications and workloads. Consequently, security teams must invest considerable time and effort in working out which groups of workloads belong to which applications.

Businesses can use AI to enhance network security by studying network traffic patterns and recommending both functional groupings of workloads and security policies.
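A much simplified picture of how traffic analysis can suggest workload groupings and policy is sketched below in Python. It assumes flow records of the form (source, destination, port), groups workloads that share an identical communication profile, and proposes allow rules between those groups with everything else denied by default, in the spirit of zero trust. The workload names and the grouping heuristic are hypothetical; commercial AI tools use far richer features.

```python
from collections import defaultdict

# Hypothetical observed flows: (source workload, destination workload, port).
flows = [
    ("web-1", "app-1", 8080),
    ("web-2", "app-1", 8080),
    ("app-1", "db-1", 5432),
    ("web-1", "app-1", 8080),  # repeated flows are common in real logs
]


def group_workloads(flows):
    """Group workloads whose inbound/outbound port usage is identical."""
    profile = defaultdict(set)
    for src, dst, port in flows:
        profile[src].add(("out", port))
        profile[dst].add(("in", port))
    groups = defaultdict(list)
    for workload, prof in profile.items():
        groups[frozenset(prof)].append(workload)
    # Map each workload to a readable group label, e.g. "web-1+web-2".
    label = {}
    for members in groups.values():
        name = "+".join(sorted(members))
        for workload in members:
            label[workload] = name
    return label


def recommend_policy(flows):
    """Propose deduplicated allow rules between groups; all else is denied."""
    label = group_workloads(flows)
    return sorted({(label[src], label[dst], port) for src, dst, port in flows})


for src, dst, port in recommend_policy(flows):
    print(f"ALLOW {src} -> {dst} port {port}")
print("DENY all other traffic")
```

Here web-1 and web-2 end up in one group because they behave identically, so a single rule covers both even though their names alone would not reveal the relationship.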

Risks and Drawbacks of AI in CyberSecurity

While AI gives companies powerful algorithms and techniques for strengthening security, it also gives hackers and attackers new ways to exploit victims, as noted above.

“Hackers are already using these tools to individualize content, and it’s not just ChatGPT. One of the things that you are able to easily identify when you’re looking at the emails that come in from a phishing attack is you look at some of the key elements in it, whether it’s human or automated AI content. But these tools have mastered the individualization of content to mimic natural human behavior.”

Andy Thurai

Vice President and Principal Analyst, Constellation Research Inc.

Andy Thurai made these remarks on the influence of AI in cybersecurity while speaking with theCUBE industry analyst Dave Vellante at CloudNativeSecurityCon, during an exclusive broadcast on theCUBE, SiliconANGLE Media’s livestreaming studio. We quote him from SiliconANGLE’s website.

One of the most significant risks of relying on AI in cybersecurity is the potential for bias. AI algorithms are only as objective as the data they are trained on: if the training data contains biases, the AI is likely to produce biased results. Such bias can lead to false positives, false negatives, and other errors that put security at risk.
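A practical way to surface this kind of bias is to measure a detector’s false-positive and false-negative rates separately for each slice of data, such as traffic from different business units or device types. The Python sketch below does this for a handful of labeled alerts; the segment names and records are invented for illustration.

```python
from collections import defaultdict

# Hypothetical evaluation records: (segment, actually_malicious, flagged_by_model)
records = [
    ("office-laptops", True, True),
    ("office-laptops", False, False),
    ("office-laptops", False, False),
    ("office-laptops", True, True),
    ("factory-iot", True, False),   # missed attack (false negative)
    ("factory-iot", False, True),   # false alarm (false positive)
    ("factory-iot", False, False),
    ("factory-iot", True, True),
]


def error_rates_by_segment(records):
    """Return {segment: (false_positive_rate, false_negative_rate)}."""
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for segment, malicious, flagged in records:
        c = counts[segment]
        if malicious:
            c["tp" if flagged else "fn"] += 1
        else:
            c["fp" if flagged else "tn"] += 1
    rates = {}
    for segment, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["fn"] + c["tp"]
        fpr = c["fp"] / negatives if negatives else 0.0
        fnr = c["fn"] / positives if positives else 0.0
        rates[segment] = (fpr, fnr)
    return rates


for segment, (fpr, fnr) in error_rates_by_segment(records).items():
    print(f"{segment}: FPR={fpr:.0%} FNR={fnr:.0%}")
# office-laptops: FPR=0% FNR=0%
# factory-iot: FPR=50% FNR=50%
```

A large gap between segments, as in this toy data, can indicate that the weaker segment was under-represented in training and is a cue to gather more representative data.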

Another risk is that cybercriminals can use AI to launch more sophisticated attacks. For example, AI can generate phishing emails that are more lifelike and harder to spot, which can lead to more successful attacks and data breaches.

Final Words on AI in CyberSecurity

Although AI has the potential to transform the cybersecurity industry completely, it also carries threats that need to be examined carefully. Companies should be mindful of the inherent biases in AI algorithms and take precautions to ensure their AI systems are trained on representative, impartial data.

Despite the dangers, AI in cybersecurity offers enormous opportunities. Organizations can better protect themselves against cyberattacks by using AI to automate operations and speed up incident response times. As with any technology, the key to using AI effectively is to use it ethically and with a clear grasp of its potential hazards and advantages.
