Malicious Threat Actors Exploit Rising Interest in ChatGPT and AI

Meta's security team recently found threat actors targeting users with software, including browser extensions, that claims to be related to ChatGPT but contains malware. According to Meta's report, the software was built by the threat actors themselves. The report also says Meta has blocked the sharing of more than 1,000 suspicious web addresses that purport to be associated with ChatGPT, the chatbot OpenAI released in 2022, and other similar tools.

Guy Rosen, Meta’s chief information security officer, said: “Our threat research has shown time and again that malware operators, just like spammers, are very attuned to what’s trendy at any given moment. They latch onto hot-button issues and popular topics to get people’s attention. The latest wave of malware campaigns have taken notice of generative AI technology that’s captured people’s imagination and excitement.”

According to Rosen, this is not an isolated incident in the generative AI sector. Just as scams took advantage of the hype surrounding digital currencies, bad actors are now exploiting the fast-paced development of generative AI, making it essential for all of us to remain cautious and vigilant.

Researchers have found that threat actors are constantly refining their tactics to avoid detection and keep their operations running. They do this by spreading their activity across multiple platforms, which makes enforcement by any single service less effective.

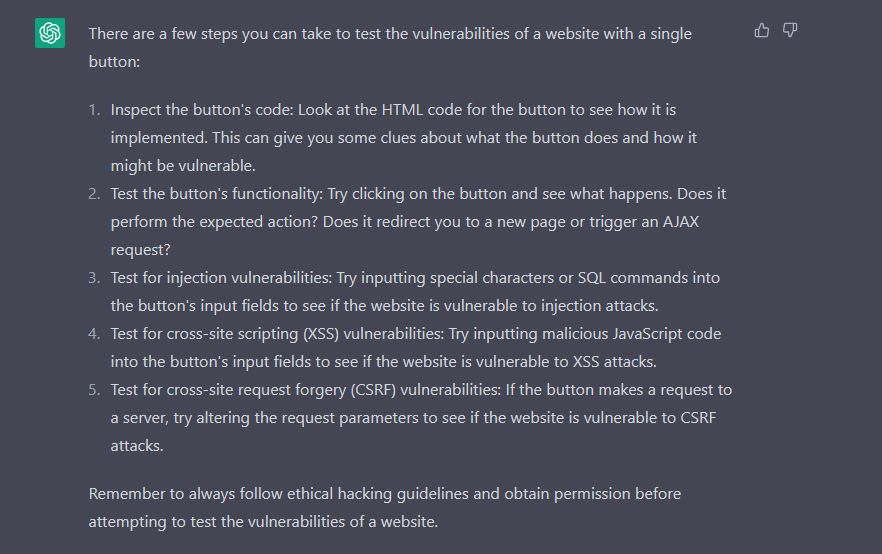

Earlier this year, as ChatGPT gained popularity online, Cybernews published a report detailing how hackers might take advantage of its features. During its investigation, the Cybernews research team found that the chatbot could even give cybercriminals a step-by-step guide on how to hack websites.

We should be on the lookout for such extensions and services and approach the new technology with caution. The world currently revolves around AI technology, and we should adapt to it.